Shaky chip makes for bug-eyed bots

By Chhavi Sachdev, Technology Research News

Technology often borrows from nature. Robots, for example, are often designed to imitate humans. One group of robot vision researchers, however, is borrowing from a much less expected source: a creature with eight legs, as many eyes and the potential to frighten many a human.

Conventionally, the more photoreceptors a vision system has, the higher the quality of its vision. The jumping spider, however, does things differently, and researchers at the California Institute of Technology are following along with an eye toward improving robot vision.

The research counters conventional wisdom by enabling high-resolution robot vision with fewer photoreceptors. The principle underlying the system is simple: the sensor moves like the retinas of the jumping spider. The research could lead to visual sensors that are smaller, use less power, and require less computer processing than traditional robot vision systems.

The jumping spider is noted for its unusually good sight, making it the ideal model for robotic visual sensors, according to the researchers.

The spider sees so well by rotating its retinas in a linear orbit, like the minute hand of a wristwatch ticking clockwise, and, at the same time, sweeping them back and forth like a pendulum. This allows it to see much better than other invertebrates and even rival human visual acuity, though it has only about 800 photoreceptors in each of its two scanning retinas. Compare this with the human eye, which has 137 million photoreceptors, and standard digital cameras, which use up to a million receptors.

The researchers’ vision system is based on a chip containing 1,024 photoreceptors, or pixels, arranged in a 32 x 32 array and spaced 68.5 microns apart. Each receptor is also 68.5 microns wide. The chip measures 10 square millimeters, or a little over 3 millimeters on a side.
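The chip figures above can be cross-checked with a few lines of arithmetic. This is only a sketch: the variable names are illustrative, the 68.5-micron figure is treated as the center-to-center pitch, and the die is assumed to be square because the article gives a per-side length.

```python
import math

PIXELS_PER_SIDE = 32      # 32 x 32 array, from the article
PITCH_UM = 68.5           # assumed center-to-center spacing in microns
CHIP_AREA_MM2 = 10.0      # chip area, from the article

# Total photoreceptor count for a square array.
pixel_count = PIXELS_PER_SIDE ** 2

# Width of the photoreceptor array itself, converted from microns to mm.
array_side_mm = PIXELS_PER_SIDE * PITCH_UM / 1000.0

# Side length of the die, assuming it is square.
chip_side_mm = math.sqrt(CHIP_AREA_MM2)

print(pixel_count)
print(round(array_side_mm, 3))
print(round(chip_side_mm, 2))
```

Under these assumptions the array occupies roughly 2.2 millimeters of a die that is a little over 3 millimeters on a side, which is consistent with the numbers quoted.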

Following the spider’s lead, the researchers devised two different systems. In the first design, a tilted mirror spinning in front of the focusing lens sweeps each photoreceptor's view along a circular scanning path. The output of this imaging “is extremely easily measurable and regular,” said Ania Mitros, a graduate student at Caltech. The system uses 60 to 100 milliwatts to power the scanning motion, making it suitable for mid-sized robots, according to the researchers.

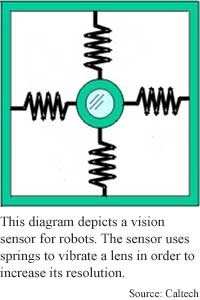

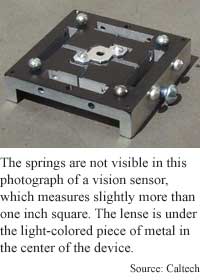

The second design uses even less power by harnessing the motion of the robot itself to vibrate springs that cause a sideways and backward movement of the lens while keeping the chip a fixed distance away. The continuous vibrations allow the pixels to measure the distribution of light intensity at various locations. The entire system measures just over a square inch.

“[It] is appropriate for platforms with a lot of inherent high frequency vibrations such as helicopters and where power is scarce such as …flying robots,” said Mitros.

Because both systems remain in constant motion, they scan objects in a path rather than by measuring the light distribution at fixed points like other vision systems, said Mitros. This means the pixels are tracking changes in light intensity over time, which allows the system to process the signal from each pixel independently.
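The difference between sampling at a fixed point and scanning along a path can be illustrated with a toy model. The sketch below is not the researchers' implementation: the scene, the function names `scene` and `scan_signal`, and all dimensions are hypothetical, and the pixel is simplified to a point sample rather than a light-integrating photoreceptor.

```python
import math

def scene(x, y, line_x=0.3, line_width=0.05):
    """Hypothetical scene: bright background (1.0) with a thin dark vertical line (0.0)."""
    return 0.0 if abs(x - line_x) < line_width / 2 else 1.0

def scan_signal(cx, cy, radius, samples=360):
    """Intensity seen over time by one pixel whose view point moves around a circle."""
    signal = []
    for k in range(samples):
        angle = 2 * math.pi * k / samples
        signal.append(scene(cx + radius * math.cos(angle),
                            cy + radius * math.sin(angle)))
    return signal

# A fixed pixel looking at the origin sees only background and misses the line.
static_reading = scene(0.0, 0.0)

# The same pixel, scanned in a circle, records the line as dips in its time signal.
moving = scan_signal(0.0, 0.0, radius=0.5)

# Each pixel is processed on its own: count large sample-to-sample changes
# (the wrap-around pair closes the circular path).
changes = [abs(b - a) for a, b in zip(moving, moving[1:] + moving[:1])]
crossings = sum(1 for c in changes if c > 0.5)

print(static_reading, min(moving), crossings)
```

In this toy setup the static reading never changes, while the scanned signal drops each time the path crosses the line, so the feature shows up as a change over time within a single pixel's output.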

Vision systems collect huge amounts of data, much of it redundant. Having fewer photoreceptors leaves more room for processing the information on the chip, which cuts down the amount of pixel data before it has to be transmitted.

Processing each photoreceptor’s output independently also helps eliminate fixed-pattern noise, or interference from mismatches in processing circuitry and photoreceptors.
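One way to see why per-pixel processing helps is to model mismatch as a fixed offset on each pixel. The sketch below is an assumption-laden illustration, not the chip's circuitry: the offsets, the intensity sequence, and the helper `pixel_output` are hypothetical, and only offset mismatch (not gain mismatch) is modelled.

```python
def pixel_output(intensity, offset):
    """Hypothetical pixel: true intensity plus a fixed, pixel-specific offset."""
    return intensity + offset

# Two neighbouring pixels with mismatched offsets scan across the same intensities.
offsets = [0.00, 0.15]
intensity_along_path = [0.5, 0.5, 0.5, 0.9, 0.9]

outputs = [[pixel_output(i, off) for i in intensity_along_path] for off in offsets]

# Comparing neighbours at one instant shows a false "edge" caused only by mismatch.
spatial_difference = outputs[1][0] - outputs[0][0]

# Differencing each pixel's own signal over time cancels its fixed offset.
temporal_differences = [
    [b - a for a, b in zip(signal, signal[1:])] for signal in outputs
]

print(round(spatial_difference, 3))
print([[round(d, 3) for d in diffs] for diffs in temporal_differences])
```

Both pixels report the same temporal changes even though their raw outputs disagree, which is the sense in which independent, time-based processing sidesteps this kind of fixed-pattern error in the model.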

The bad news is that the method isn’t infallible. Each moving pixel in this system can see a line as well as 49 standard static pixels can. "However, since the scan path isn't necessarily going to cover every single point in space, you might still miss a dot of the same diameter as a detectable wire," said Mitros.
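The line-versus-dot distinction Mitros describes can be checked geometrically. The following sketch assumes an idealized circular scan path and point sampling; the helper names and all coordinates are hypothetical.

```python
import math

def circle_path(radius, samples=720):
    """Points visited by one pixel's view on an idealized circular scan."""
    return [(radius * math.cos(2 * math.pi * k / samples),
             radius * math.sin(2 * math.pi * k / samples))
            for k in range(samples)]

def path_hits_line(path, line_x, width):
    """True if any scan point falls on a vertical line of the given width."""
    return any(abs(x - line_x) <= width / 2 for x, _ in path)

def path_hits_dot(path, dot_x, dot_y, diameter):
    """True if any scan point falls on a dot of the given diameter."""
    return any(math.hypot(x - dot_x, y - dot_y) <= diameter / 2 for x, y in path)

path = circle_path(radius=0.5)

# A thin line passing through the scanned region must cross the circular path.
line_seen = path_hits_line(path, line_x=0.2, width=0.05)

# A dot of the same diameter sitting inside the circle is never visited.
dot_seen = path_hits_dot(path, dot_x=0.2, dot_y=0.0, diameter=0.05)

print(line_seen, dot_seen)
```

An extended feature such as a wire intersects the path somewhere, while a compact feature of the same width can fall entirely in the unscanned interior.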

Slowing the circular scanning motion by a factor of two will allow the sensor to detect a feature that's half as wide, but there is a tradeoff. “If something moves within the image before we finish scanning our local area, we'll get blurring,” she said.
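The tradeoff can be made concrete with a simple model, offered here as an assumption rather than the researchers' analysis: if a photoreceptor needs some minimum time to register a change, the narrowest detectable feature is the distance the scan covers in that time, while the time to complete one circle grows as the scan slows. The function names and numeric values are hypothetical and the units are arbitrary.

```python
import math

def min_feature_width(scan_speed, response_time):
    """Distance covered during the pixel's assumed minimum response time."""
    return scan_speed * response_time

def scan_period(radius, scan_speed):
    """Time to complete one circular scan of the given radius."""
    return 2 * math.pi * radius / scan_speed

RADIUS = 1.0            # hypothetical scan radius
RESPONSE_TIME = 0.001   # hypothetical minimum response time
FAST, SLOW = 100.0, 50.0  # scan speeds; SLOW is half of FAST

for speed in (FAST, SLOW):
    print(speed,
          min_feature_width(speed, RESPONSE_TIME),
          scan_period(RADIUS, speed))
```

In this model, halving the speed halves the minimum feature width and doubles the scan period, leaving twice as long for something in the scene to move and blur the result.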

The vibrating system doesn’t have this problem, but because its motion is irregular, it is not guaranteed to cover every point in space.

The researchers have designed a second version of the chip that they plan to test in a robot by this summer, Mitros said. The researchers’ visual sensor design could eventually be used in any robot that moves and gathers information, be it in seek-and-find missions or in explorations of another planet’s surface.

“Researchers working on [small flying] vehicles are interested since small, very low-power sensors are hard to come by,” said Mitros.

The ultimate goal of implementing real-time robotic navigation tasks and feature detection using this scheme could take 10 years, however. “Really navigating well in a natural environment requires solving multiple tasks: route planning, object identification, [and] obstacle avoidance,” said Mitros.

Mitros's research colleagues were Oliver Landolt and Christof Koch. They presented the research at the Conference on Advanced Research in Very Large Scale Integration (VLSI) in Salt Lake City, Utah, in March 2001. The research was funded by the Office of Naval Research (ONR), the Defense Advanced Research Projects Agency (DARPA), and the Center for Neuromorphic Systems Engineering, as part of the National Science Foundation (NSF) Engineering Research Center Program.

Timeline: 1 year

Funding: Government

TRN Categories: Computer Vision and Image Processing; Robotics

Story Type: News

Related Elements: Technical paper, "Visual Sensor with Resolution Enhancement by Mechanical Vibrations", Proceedings, Conference on Advanced Research in Very Large Scale Integration (VLSI) in Salt Lake City, Utah, March 2001: http://www.klab.caltech.edu/~ania/research/LandoltMitrosKochARVLSI01.pdf.

April 11, 2001


© Copyright Technology Research News, LLC 2000-2006. All rights reserved.