Virtual view helps run tiny factory

By Chhavi Sachdev, Technology Research News

Constructing a machine from parts smaller than the human eye can see is one of the biggest challenges in micromachinery.

Precision is difficult because the machines assembling the parts are huge in comparison with the products they fabricate. Because the work space is so cramped, small movements must be precise so that the tools do not collide, and the force applied by the tools must be well-controlled so parts are not crushed.

In addition, because the whole process is so small, it is difficult to see whether tasks are being performed correctly.

To address these problems, a team of researchers in France and Japan has designed a desktop microfactory that allows a person to set up microassembly processes by walking through a virtual assembly line.

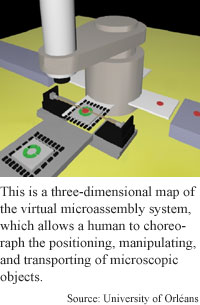

The system provides a human operator with a virtual, three-dimensional map of the scene, said Antoine Ferreira, an associate professor at the Vision and Robotics Laboratory of Bourges in France. The virtual interface is a simulation of the microworld that represents all the tasks of microfactory processing.

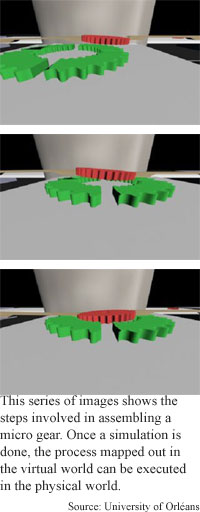

In the planning stage, the operator configures the positioning, measurement, handling and movement of all the different parts by using the interface to verify the feasibility of semi-automatic tasks such as assembling the gears of a watch. The tasks can then be carried out in a continuous cycle.

The simulation allows the operator to estimate the accuracy, cycle time, and physical limits of the automated assembly of microelectromechanical systems (MEMS) before they are implemented in a microfactory.

The human operator, as in a macroscopic factory, “is a supervisor controlling the…on-line execution of the assembly tasks through the VR interface,” said Ferreira. If an object were damaged, or a microtool broken, the operator could switch to manual mode to fix it, he said.

Using the microfactory simulation to plan ahead speeds up the microassembly process and makes it more efficient, said Ferreira. "In terms of reduction of execution time, lost energy, reduction costs, [and] precision of the assembled parts, there is a reduction factor ranging from 10 to 50," Ferreira said.

Research in this area has been ongoing for quite a while, but the simulations are not yet suitable for practical use, said Yu-Chong Tai, an associate professor of electrical engineering at the California Institute of Technology. “Demoed micro-machine tools by Japan are far from being satisfactory in terms of performance. Big machine tools are still better in terms of accuracy and ease of use,” he said.

The microfactory system would be used "for fast micro-prototyping, but this will not be real for industry for many years to come," Tai said.

The system is clearly feasible, said Russell Taylor, a research associate professor of computer science, physics and astronomy, and materials science at the University of North Carolina at Chapel Hill.

The researchers' contribution to the field "is in their closed loop position/force feedback system that moves objects from one location and orientation to another," Taylor said. "Their system is not what I would call VR [since] it is not immersive, but standard through-the-window, two-dimensional computer graphics," he said.

Several different kinds of virtually-assisted microsystems could be in use within the next five years, Ferreira said. The simulation could be used to assemble products like watches, very small circuit board components, and microprocessors. It could also be used to manufacture microsensors, cellular phone parts, and eventually even microrobots and micromachines, he said.

The researchers' next step is to improve the system’s accuracy. The current system is accurate to within three microns. They are also aiming to join together the processes that they have demonstrated separately. Watches, for example, are assembled from several dozen hybrid parts. The researchers are aiming for an automated microfactory that handles, conveys, manipulates, and assembles all the different micro-sized objects in one process.

Ferreira's colleagues were Jean-Guy Fontaine at the Vision and Robotics Laboratory at the University of Orléans, France, and Shigeoki Hirai at the Micro-Robotics Lab of the Electrotechnical Laboratory in Tsukuba, Japan. The research was funded by the Japanese National MITI Micromachine project and the Higher Engineering School of Bourges. The research was presented at the 32nd International Symposium on Robotics, April 19-21, 2001, in Seoul, Korea.

Timeline: > 5 years

Funding: government; University

TRN Categories: Microelectromechanical Systems (MEMS); Robotics; Data Representation and Simulation

Story Type: News

Related Elements: Technical paper, "Visually servoed force and position feedback for teleoperated Microassembly based virtual reality," proceedings of the 32nd International Symposium on Robotics, April 19-21, 2001, Seoul, Korea.


July 18, 2001


© Copyright Technology Research News, LLC 2000-2006. All rights reserved.