Interactive robot has character

By Eric Smalley and Susanna Space, Technology Research News

Combine some of the most advanced human-computer interaction technology with one of the oldest forms of entertainment -- puppetry -- and you get Horatio Beardsley.

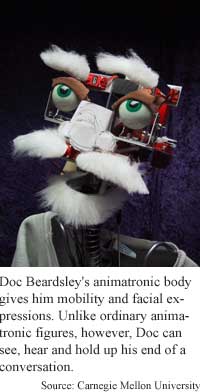

Doc Beardsley is an animatronic robot, a descendant of the mechanical humans and beasts that rang bells and performed other actions as parts of the clocks of medieval European cathedrals. Modern science, however, has carried Doc far beyond these ancient automata, endowing him with the ability to see, understand spoken words and carry on a conversation.

Researchers at Carnegie Mellon University made the amusing, forgetful inventor as a literal embodiment of a computer interface. Doc performs for audiences, answering questions about himself. He claims to have been born on a mountaintop in Austria to a family of goatherds, and to have invented endless bread, the milkbed, the antisnooze and the foon.

In addition to paving the way for a future generation of theme park animatronic characters, the technology could lead to embodied personal digital assistants with personalities, interactive electronic pets, animated historical museum figures, and robotic waiters and salespeople, said Ron Weaver, a graduate student at Carnegie Mellon.

Several layers of software drive Doc's apparent wit. Synthetic interview software, which includes speech recognition abilities, allows Doc to react to spoken questions. The technology, developed at Carnegie Mellon for use with video characters, gives a character sets of lines to deliver on given topics. This allows Doc to give appropriate answers to questions that match an anticipated query closely enough, said Weaver.

If the question hasn't been anticipated, another layer of software takes over. A discussion engine tracks the questions and answers during a conversation and allows Doc to make relevant comments by keying off individual words even if he doesn't understand a specific question, said Weaver. And if that doesn't work, the discussion engine tosses the conversation back to the questioner, he said.

"The discussion engine will first try to deliver a comment that is still relevant based on whatever individual keywords can be found in the text," said Weaver. "Failing that, the character gives a random comment that either pretends to reflect what is being discussed to try to keep [the conversation] going, or transfers the onus of the conversation back to the guest."
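The layered fallback Weaver describes can be sketched in a few lines of Python. This is only an illustration of the idea, not the Carnegie Mellon software: the function names, the word-overlap score, the 0.6 threshold and most of the sample lines are assumptions made for the example, and it works on typed text rather than recognized speech.

```python
import random
import re

# Tier 1: hypothetical scripted lines for anticipated questions.
ANTICIPATED = {
    "what's your best invention":
        "I crossed a spoon with a fork and thus created the foon.",
    "where were you born":
        "On a mountaintop in Austria, to a family of goatherds.",
}

# Tier 2: hypothetical comments triggered by single keywords.
KEYWORD_COMMENTS = {
    "invention": "Have I told you about endless bread?",
    "university": "I've spent time at many universities.",
}

# Tier 3: hypothetical lines that hand the conversation back to the guest.
FALLBACKS = [
    "Hmm, what was I saying? What do you think?",
    "That reminds me of something, but I forget what. Tell me more.",
]


def tokenize(text):
    """Lowercase the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def overlap(a, b):
    """Jaccard similarity between two word lists (assumed scoring rule)."""
    sa, sb = set(a), set(b)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def respond(question, threshold=0.6, rng=random):
    """Return (tier, line) using the three-step fallback."""
    words = tokenize(question)

    # Tier 1: a scripted answer if the question is close to an anticipated one.
    best_line, best_score = None, 0.0
    for anticipated, line in ANTICIPATED.items():
        score = overlap(words, tokenize(anticipated))
        if score > best_score:
            best_line, best_score = line, score
    if best_score >= threshold:
        return "scripted", best_line

    # Tier 2: a comment keyed off any individual keyword in the question.
    for word in words:
        if word in KEYWORD_COMMENTS:
            return "keyword", KEYWORD_COMMENTS[word]

    # Tier 3: a random line that tosses the conversation back to the guest.
    return "fallback", rng.choice(FALLBACKS)
```

Calling `respond("What's your best invention?")` takes the scripted path, `respond("Tell me about your best invention")` falls through to the keyword tier, and `respond("How are you feeling today?")` ends up with one of the random fallback lines.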

The researchers made Doc forgetful to help keep his lapses from breaking the illusion of a realistic personality. Here's an actual exchange:

Questioner: "How are you feeling today?"

Doc: (pause) "My-- my dog just died. No, I'm just kidding. He just got run over. He's still alive. Well, it's a she really. I just refer to her as a boy to bust her chops, keep her honest, you know?"

Questioner: "What's your best invention?"

Doc: "Well, you know, there was this one invention I made accidentally while working on genetic recombination. I crossed a spoon with a fork and thus created the foon. It was used either as a spoon or a fork."

Microphones and cameras that track the sounds and movements of an audience provide Doc's audiovisual perception, said Todd Camill, a research engineer at Carnegie Mellon University's Robotics Institute. "Microphones listen for sounds made by people in the room, and a camera subsystem tracks the movement of people by finding areas of skin tone in the room. The audio and vision systems generate position data that tell Doc where to turn his head."
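As a rough illustration of how skin-tone tracking could yield a head position, the Python sketch below marks pixels that pass a crude color test and converts the average column of those pixels into a pan angle. Everything specific here is an assumption made for the example, including the RGB thresholds, the 60-degree field of view and the function names; the article does not describe how Doc's vision system actually does this.

```python
def is_skin(pixel):
    """Crude RGB skin-tone test (assumed thresholds, not Doc's real rule)."""
    r, g, b = pixel
    return (r > 95 and g > 40 and b > 20
            and max(pixel) - min(pixel) > 15
            and abs(r - g) > 15 and r > g and r > b)


def pan_angle(frame, fov_degrees=60.0):
    """Map the centroid of skin-tone pixels to a head pan angle.

    frame is a list of rows, each row a list of (r, g, b) tuples.
    Returns degrees, negative for left of center, or None if no skin
    tone is found. fov_degrees is a hypothetical camera field of view.
    """
    columns = [x for row in frame
               for x, pixel in enumerate(row) if is_skin(pixel)]
    if not columns:
        return None
    width = len(frame[0])
    if width < 2:
        return 0.0
    centroid = sum(columns) / len(columns)
    # Scale the column range 0..width-1 onto -fov/2..+fov/2.
    offset = (centroid - (width - 1) / 2) / (width - 1)
    return offset * fov_degrees
```

A real system would also have to merge the camera estimate with the microphone data and smooth the result over time so the head does not jitter; the sketch leaves both out.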

One aspect of making Doc Beardsley a believable character is keeping the technology in a supporting role, according to Tim Eck, another Carnegie Mellon graduate student. "Character and story are the most important aspects to creating believable, entertaining characters," he said. "We are striving to provide the illusion of life, to create an entertaining experience, which is an important distinction. We are not trying to create artificially intelligent agents. We are creating the illusion of intelligence with time-tested show business techniques: drama, comedy, timing and the climactic story arc."

As with many creative endeavors, serendipity plays an important role. "From time to time, we find ourselves caught off guard by conversations that seem to make sense in ways we did not intend," said Camill. "For example, we've recently heard this exchange:

Guest: 'Doc, why are you wearing a Carnegie Mellon University sweatshirt?'

Doc: 'I've spent time at many universities. You'd be surprised at the things they throw away.'"

In addition to using traditional storytelling and theatrical techniques, the researchers are studying the human side of human-computer interaction. "Since our goal is the illusion of human intelligence or intent in the service of a story, a large part of our results concern the human audience rather than the robot," said Camill. "We are exploring the social dynamics between human and machine by exploiting the tendency of people to project human qualities on the objects around them."

From the entertainment perspective, the ultimate goal is creating synthetic characters that seem to possess dramatic human qualities, like a sense of humor, comic timing, personal motivations and improvisation, said Camill. "When an audience can get so engrossed in interacting with Doc's dialogue and story that the technology is completely forgotten, then we know we have accomplished our goal," he said.

The next steps in the project are improving the character by adding skin and a costume, building a set and props, creating a show, building puppeteering controls for the props, and writing software for producing other shows, said Camill.

The technology is not yet ready for the entertainment industry, said Eck. "The main reason [is] speech recognition technology. We believe once the overall accuracy of speaker-independent speech recognition is 80 percent or higher, applications such as ours will be seen in the entertainment industry. This will be approximately 5 to 8 years from now," he said.

The research is funded by Carnegie Mellon University.

Timeline: 5-8 years

Funding: University

TRN Categories: Robotics; Artificial Intelligence

Story Type: News

Related Elements: Project website: http://micheaux.etc.cmu.edu/~iai/web/newIAI/doc.html


March 6, 2002


© Copyright Technology Research News, LLC 2000-2006. All rights reserved.