Nanocomputer skips clock

By Eric Smalley, Technology Research News

The boom in nanotechnology research is likely to lead to computer components that are as small as individual molecules, which should make for extremely powerful computers.

But such computers are likely to require new designs. Even if it proved possible to push today's chip manufacturing processes to make computer circuits at the molecular scale, doing so would probably not be economical. Many researchers are turning to simple components that assemble automatically.

Researchers from Communications Research Laboratory (CRL) in Japan have come up with a design for nanocomputers that would use less power, dissipate less heat, require less wiring, and be more reconfigurable than existing proposals.

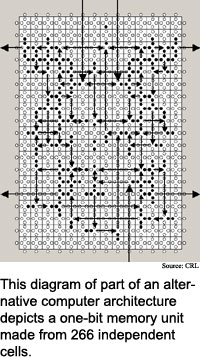

The design is a type of cellular automaton, a large array of simple, identical components, or cells. Each cell can be switched between two states that can represent the 1s and 0s of computing. The cells communicate via signals generated by chain reactions along lines of cells.
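The chain-reaction signaling described above can be pictured with a toy model. The following is a minimal sketch, not the researchers' design: it assumes a one-dimensional line of two-state cells and a made-up rule in which a cell holding a 1 passes it to its right-hand neighbor. The function names `propagate` and `run` are illustrative, and the cells are updated in lockstep only to keep the example short.

```python
def propagate(line):
    """Advance the signal one step along a line of two-state cells.

    Assumed toy rule: a cell in state 1 hands the signal to its
    right-hand neighbor and returns to state 0, like a falling domino.
    A signal that reaches the last cell leaves the line on the next step.
    """
    nxt = [0] * len(line)
    for i, state in enumerate(line):
        if state == 1 and i + 1 < len(line):
            nxt[i + 1] = 1
    return nxt


def run(line, steps):
    """Return the list of line states, starting with the initial one."""
    history = [list(line)]
    for _ in range(steps):
        line = propagate(line)
        history.append(line)
    return history


if __name__ == "__main__":
    # Print each step so the 1 can be seen moving along the line.
    for row in run([1, 0, 0, 0, 0], 4):
        print("".join(str(s) for s in row))
```

Each cell here needs to know only its own state and one neighbor's, which is the property that makes such arrays candidates for bulk manufacture.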

The promise of cellular automata is that the cells can be made in bulk using inexpensive chemical synthesis rather than the relatively expensive photolithography process used to make today's computer chips. "Simplicity of the cells is an extremely important issue for this work to be [practical]," said Ferdinand Peper, a senior researcher at Communications Research Laboratory and a visiting professor at Himeji Institute of Technology in Japan.

The square cells in the researchers' design contain four bits -- one on each side -- and nine rules govern interactions between cells. The rules control the flow of information through an array of cells, and are comparable to the basic logic operations of today's computer chips. The researchers have combined the rules to derive a for-loop. A for-loop, which repeats a set of instructions a given number of times, is a basic element of computer programs.
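One way to picture a four-bit cell governed by a small rule set is as a lookup table from bit patterns to bit patterns. The sketch below is an assumption-laden illustration: the article does not list the nine rules, so the two entries in `RULES` are hypothetical stand-ins, and the `(north, east, south, west)` ordering and the name `apply_rules` are likewise invented here. In the actual design the rules govern interactions between neighboring cells, which this single-cell toy does not model.

```python
from itertools import product
from typing import Dict, Tuple

# One bit per side of the square cell: (north, east, south, west).
Bits = Tuple[int, int, int, int]

# HYPOTHETICAL rules for illustration only; these are NOT the nine
# rules from the paper. Each maps a cell state to its next state.
RULES: Dict[Bits, Bits] = {
    (0, 0, 0, 1): (0, 1, 0, 0),  # a bit on the west side crosses to the east
    (1, 0, 0, 0): (0, 0, 1, 0),  # a bit on the north side crosses to the south
}


def apply_rules(cell: Bits, rules: Dict[Bits, Bits]) -> Bits:
    """Return the next state of a cell; a cell no rule matches is unchanged."""
    return rules.get(cell, cell)


# Four bits give 2**4 = 16 possible states per cell.
ALL_STATES = list(product((0, 1), repeat=4))

if __name__ == "__main__":
    for state in ALL_STATES:
        print(state, "->", apply_rules(state, RULES))
```

Keeping the table this small is the point of the exercise: the fewer states and rules a cell needs, the simpler it is to build.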

The key to the architecture's advantages over existing proposals is that its circuits can handle randomly timed signals rather than requiring that all signals be synchronized by a central clock. Nearly all computer processors include a clock that coordinates the processor's workings by sending a time signal throughout its circuits. As computers become faster, it becomes more difficult and takes more energy to coordinate everything using a clock signal.

The no-clock architecture fits well with cellular automata, which are inherently asynchronous. "Our method to compute on asynchronous cellular automata boils down to simulating... delay-insensitive circuits on the cellular automata," said Peper.
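To see what delay-insensitive means in practice, consider a Muller C-element, a textbook building block of asynchronous circuits. The article does not say which primitives the researchers simulate, so this is only an illustrative sketch: the class `CElement` and the helper `fire_in_random_order` are names chosen here. The element's output changes only once both inputs agree, so the result is the same no matter which input signal arrives first.

```python
import random


class CElement:
    """Muller C-element: the output follows the inputs only when they agree."""

    def __init__(self):
        self.a = 0
        self.b = 0
        self.out = 0

    def set_input(self, name, value):
        """Deliver a signal to input 'a' or 'b' and return the output."""
        if name == "a":
            self.a = value
        elif name == "b":
            self.b = value
        else:
            raise ValueError("input must be 'a' or 'b'")
        if self.a == self.b:
            self.out = self.a  # inputs agree: output switches
        return self.out        # otherwise the old output is held


def fire_in_random_order(seed):
    """Raise both inputs in a random order; return the order and outputs."""
    rng = random.Random(seed)
    gate = CElement()
    arrivals = ["a", "b"]
    rng.shuffle(arrivals)  # stands in for unpredictable signal delays
    trace = [gate.set_input(name, 1) for name in arrivals]
    return arrivals, trace


if __name__ == "__main__":
    for seed in range(5):
        print(fire_in_random_order(seed))
```

Whatever the arrival order, the output stays at 0 after the first signal and rises to 1 only after the second, so no clock is needed to line the two signals up.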

Previous proposals for computing with asynchronous cellular automata called for simulating synchronous communications, but this makes cells and cell interactions more complicated, said Peper. Computers constructed using these architectures would require more power and generate more heat than asynchronous machines, he said. "Many cells [would] have to actively switch even when they carry out no useful computations," he said.

Researchers have been developing asynchronous computer circuits for decades, and there are commercial asynchronous computer chips, but these circuit designs are not appropriate for cellular automata, said Peper. "We were able to [design] delay-insensitive circuits that are more efficient for our purposes than the delay-insensitive circuits used with solid-state electronics," he said.

The efficiency arose from thinking about programming rather than circuits, said Peper. "It became clear to me that it would be possible to write computer programs in terms of delay-insensitive circuits, which in turn could be laid out on asynchronous cellular automata," he said.

Computers made using the design would be able to process highly parallel applications as many as one billion times faster than today's computers, said Peper. These applications include artificial intelligence, which typically requires checking many possibilities in a search tree; simulations of neural systems, which employ a large number of neurons working in parallel; and simulations of physical systems, which project the interactions of large numbers of particles, he said.

The speed increase for applications with little inherent parallelism will be less -- "probably at most 100 times," said Peper. "However, it all depends very much [on] the technology used," he said.

The architecture could eventually be used to produce high-performance, low-power wearable computers running artificial intelligence applications that will supplement human communication and intellectual abilities, said Peper. "A high computational power is needed to process and interpret the signals received from humans," he said.

The researchers' plan for the next two years is to work on simplifying the cells in order to make them easier to manufacture. The researchers are also looking to build a prototype computer using conventional technology to test the architecture, said Peper. This prototype will be ready in five to seven years, he said.

The researchers are ultimately aiming to build a reconfigurable defect- and fault-tolerant computer that will conduct computations using cells measuring 20 by 20 nanometers, said Peper. There are several challenges to carrying this out, he said.

The first is to devise methods of building and configuring the cells so that they form the circuits needed to carry out computations. "The big challenge is to include this functionality... while keeping the cells simple," said Peper.

Another challenge is handling circuit faults and defects, Peper said. The researchers are aiming to deal with defects not by repairing them, but by leading signals around them, he said. To make the architecture fault-tolerant, "our aim is to find a mechanism to correct errors when cells get into erroneous states," he said.
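The idea of leading signals around defects rather than repairing them can be illustrated with ordinary pathfinding. The article does not describe the researchers' mechanism, so the sketch below swaps in a standard breadth-first search as a stand-in: it assumes a grid in which 1 marks a defective cell and 0 a working one, and the function name `route_around_defects` is invented for this example.

```python
from collections import deque


def route_around_defects(grid, start, goal):
    """Find a shortest path of working cells from start to goal.

    grid  -- list of rows; 0 = working cell, 1 = defective cell
    start -- (row, col) of a working cell where the signal enters
    goal  -- (row, col) the signal should reach
    Returns a list of (row, col) steps, or None if no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    prev = {start: None}          # remembers how each cell was reached
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:   # walk back to the start
                path.append(cell)
                cell = prev[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in prev:
                prev[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


if __name__ == "__main__":
    grid = [
        [0, 0, 0, 0],
        [0, 1, 1, 0],   # two defective cells block the direct route
        [0, 0, 0, 0],
    ]
    print(route_around_defects(grid, (1, 0), (1, 3)))
```

A centralized search like this is only a convenient way to show the detour; in a cellular array the rerouting would presumably have to emerge from local cell behavior.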

It will be at least 20 years before the architecture is ready for use in a practical computer built with single-molecule components, partly because molecular electronics is not a developed field, according to Peper.

The asynchronous cellular automata architecture could be implemented sooner, however, using technologies whose development is further along, like quantum dots or molecular cascades, he added.

Quantum dots are nanoscale specks of semiconductor that trap one or a few electrons. They can be used for computing because the positions of electrons in a quantum dot can represent the 1s and 0s of computing, and they can affect the positions of electrons in adjacent dots. Molecular cascades, developed last year by IBM researchers, are intricate patterns of carbon monoxide molecules that can be triggered to cascade like falling dominoes. The cascade patterns can be used to carry out basic logic operations.

Peper's research colleagues were Jia Lee, Susumu Adachi and Shinro Mashiko. The work appeared in the March 20, 2003 issue of Nanotechnology. The research was funded by the Japanese Ministry of Public Management and Home Affairs.

Timeline: 10-20 years

Funding: Government

TRN Categories: Integrated Circuits; Architecture; Nanotechnology

Story Type: News

Related Elements: Technical paper, "Laying out Circuits on Asynchronous Cellular Arrays: A Step Towards Feasible Nanocomputers?" Nanotechnology, March 20, 2003 10.

April 23/30, 2003

© Copyright Technology Research News, LLC 2000-2006. All rights reserved.