Camera sees behind objects

By Kimberly Patch, Technology Research News

Researchers from Stanford University and Cornell University have put together a projector-camera system that can pull off a classic magic trick: it can read a playing card that is facing away from the camera.

The dual-photography system gains information from a subject by analyzing the way projected patterns of light bounce off it.

The system can show a scene from the point of view of the projector as well as that of the camera. It could eventually be used to quickly add lighting effects in movie scenes, including the ability to realistically integrate separately shot actors and computer graphics into previously shot scenes.

The work also advances efforts aimed at collecting all of the visual information about a scene by sensing light scattered off objects within it and using the information to create views of the scene from any angle under any lighting condition. The ultimate goal of this area of imaging research is photorealistic virtual reality -- the visual component of the Star Trek holodeck.

The system consists of a digital camera and digital projector. The projector beams a series of black and white pixels at a scene and the camera captures the way the light bounces off objects in the scene. The heart of the system is a computer algorithm that continually monitors the data and changes the patterns in order to gain the needed information.

What happens to the patterns from the time they leave the projector to the time they are picked up by the camera "tells us how the light... interacts with the scene," said Pradeep Sen, an electrical engineering researcher at Stanford University. Each pixel of light coming from the projector might bounce off a surface, refract or hit nothing. "Each of these interactions will modify the ray of light," said Sen.

For example, imagine a ray of white light hitting a red object, said Sen. "The reflected ray will be red, which is why the object will appear red to our eyes," he said. This is the change the camera measures. "Thus whatever color we measured at the camera, we know that this is also the color we would get at the projector if we shine a white light from the position of the camera," said Sen.

This allows the researchers to measure the light changes from the projector to the camera, then reverse the light to provide a picture from the point of view of the projector. The method works because the properties of a ray of light are unchanged when the ray is reversed, a characteristic of light termed Helmholtz reciprocity.
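
One way to picture the reversal is as a table of numbers, the "matrix of light properties" mentioned later in the article. The sketch below is a toy illustration, not the researchers' code: it assumes the scene is summarized by a matrix `T` whose entry `T[i][j]` is the fraction of light from projector pixel `j` that reaches camera pixel `i`, and that reciprocity lets the same numbers be read in the opposite direction by transposing the matrix. The scene values and every name here are made up.

```python
def transpose(matrix):
    """Swap rows and columns."""
    return [list(column) for column in zip(*matrix)]

def apply(matrix, vector):
    """Multiply a matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Hypothetical toy scene: 3 projector pixels, 2 camera pixels.
# T[i][j] = fraction of light from projector pixel j reaching camera pixel i.
T = [
    [0.5, 0.0, 0.25],
    [0.0, 1.0, 0.25],
]

# Ordinary photograph: every projector pixel is on, the camera records.
projector_pattern = [1.0, 1.0, 1.0]
camera_image = apply(T, projector_pattern)

# Dual photograph: pretend the camera emits light and the projector records.
virtual_light = [1.0, 1.0]
dual_image = apply(transpose(T), virtual_light)

print(camera_image)  # [0.75, 1.25]
print(dual_image)    # [0.5, 1.0, 0.5]
```

The dual image has one value per projector pixel, which is why the reconstructed picture appears from the projector's point of view.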

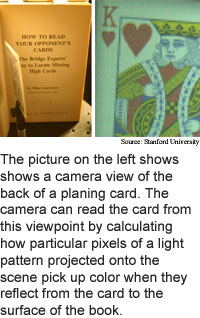

The trick to reading a playing card that is facing away from the camera is picking up light that is reflected off of a surface behind the card. "In the card experiment, the camera cannot see the card directly, but it can see the surface of the book [behind the card]; the light from the projector bounces off the card, then bounces off the book and hits the camera," said Sen.

When the projector shines on a red part of the card, like the heart of the suit, the light gets a red tint. "The camera observes it and our algorithm determines that the projector saw something red at that position," said Sen. When the projector shines on a blue part of the card, the light is blue. "In this manner, we put together the projector image pixel-by-pixel and can see the card," he said.

Producing a working system required meeting three technical challenges, said Sen. "First of all, an ordinary projector of 1,024 by 768 resolution has almost 800,000 pixels," he said. Measuring the changes to one pixel at a time would take about seven days. The researchers developed an adaptive technique that illuminated several pixels at a time to cut seven days to 14 minutes.
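
The figures quoted above can be checked with a few lines of arithmetic. This is a back-of-the-envelope sketch only: it assumes each measurement takes the same amount of time, which the article does not state, so the per-pixel time and the "equivalent measurements" figure are inferences rather than reported numbers.

```python
width, height = 1024, 768
pixels = width * height                   # 786,432, "almost 800,000"

seconds_brute_force = 7 * 24 * 3600       # seven days
seconds_adaptive = 14 * 60                # 14 minutes

# Assumption: every measurement costs the same amount of time.
seconds_per_pixel = seconds_brute_force / pixels
speedup = seconds_brute_force / seconds_adaptive
equivalent_measurements = seconds_adaptive / seconds_per_pixel

print(pixels)                             # 786432
print(round(seconds_per_pixel, 2))        # 0.77
print(speedup)                            # 720.0
print(round(equivalent_measurements))     # 1092
```

Under that assumption, the adaptive technique is roughly 720 times faster, as if only about a thousand measurements were needed instead of nearly 800,000.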

The system begins with all pixels lit, then divides the projector's pixels into four blocks and lights these in sequence. If some camera pixels respond to two of the blocks, the blocks are subdivided into four blocks, which are lit in sequence. The method saves time by lighting subsets in parallel.
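
The subdivision idea can be sketched in simplified form. The code below is an illustration, not the researchers' algorithm: it simulates a scene as a hypothetical dictionary mapping each projector pixel to the camera pixels it lights, ignores brightness, color and noise, and subdivides every block at every level. Blocks whose camera responses overlap are lit in separate shots; blocks that do not overlap are lit in the same shot, which is where the time savings come from. All names are made up for the example.

```python
def children(block):
    """Split a square block (x, y, size) into its four quadrants."""
    x, y, size = block
    half = size // 2
    return [(x, y, half), (x + half, y, half),
            (x, y + half, half), (x + half, y + half, half)]

def shoot(scene, blocks):
    """Simulate one photo: light all projector pixels in `blocks` and
    return the set of camera pixels that receive light."""
    lit = set()
    for x, y, size in blocks:
        for px in range(x, x + size):
            for py in range(y, y + size):
                lit |= scene.get((px, py), set())
    return lit

def adaptive_capture(scene, size):
    """Return (mapping, shots) for a size-by-size projector (size = power of 2).
    mapping: projector pixel -> set of camera pixels it lights."""
    root = (0, 0, size)
    footprints = {root: shoot(scene, [root])}   # start with all pixels lit
    shots = 1
    block_size = size
    while block_size > 1:
        # Greedily group blocks whose camera footprints do not overlap.
        groups = []
        for block, footprint in footprints.items():
            if not footprint:
                continue                        # block lights nothing: drop it
            for group in groups:
                if all(footprint.isdisjoint(footprints[b]) for b in group):
                    group.append(block)
                    break
            else:
                groups.append([block])
        # Light the k-th quadrant of every block in a group in one shot.
        next_footprints = {}
        for group in groups:
            for k in range(4):
                kids = {parent: children(parent)[k] for parent in group}
                response = shoot(scene, kids.values())
                shots += 1
                for parent, kid in kids.items():
                    # Parents in a group never share camera pixels, so each
                    # response can be attributed to exactly one quadrant.
                    next_footprints[kid] = response & footprints[parent]
        footprints = next_footprints
        block_size //= 2
    mapping = {(x, y): fp for (x, y, _), fp in footprints.items() if fp}
    return mapping, shots

# Toy scene: an 8x8 projector where each pixel lights one distinct camera pixel.
scene = {(x, y): {(x, y)} for x in range(8) for y in range(8)}
mapping, shots = adaptive_capture(scene, 8)
print(shots, "shots instead of", 8 * 8)   # 13 shots instead of 64
```

In this idealized scene nothing overlaps, so each level of subdivision costs only four shots. Light that bounces between objects makes camera responses overlap, which forces more sequential shots and reduces the savings.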

The second technical challenge was dealing with noise and compensating for black pixels, which are not really black, but always contain some light. "When a camera takes a picture of something the values are not 100 percent exact... they have some noise, or error to them," said Sen.

To eliminate all possible light noise, the researchers carried out their experiments in a dark room, he said. "Additional lights would add noise to the signal, which can sometimes be removed."

The third challenge is that conventional cameras have a fairly low dynamic range, or difference between the brightest and dimmest features they can capture. "You can see this effect… when you try to take a picture of people standing indoors in front of a window -- either the people are too dark and the window is just right, or the people are just right and the window is too bright," said Sen. The human eye, in contrast, has a much better dynamic range, allowing us to easily capture the details of juxtaposed bright and dark features.

To overcome the limited dynamic range of the camera, the researchers tapped existing high dynamic range techniques that stitch together pictures taken at different exposures to generate a single image with a large dynamic range.
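
A minimal sketch of the general idea behind such exposure-merging techniques follows. It is not the specific method the researchers used: it assumes a sensor whose readings are proportional to exposure time, throws away readings that are nearly black or nearly saturated, and averages what remains. The function name, thresholds and pixel values are hypothetical.

```python
def merge_exposures(exposures, low=5, high=250):
    """Combine several exposures of the same scene into one radiance estimate.

    exposures: list of (exposure_time_seconds, pixel_values), where
    pixel_values is a list of 0..255 readings, one per pixel.
    Assumes a linear sensor: reading = radiance * exposure_time.
    Returns one relative radiance per pixel, or None if no reading is usable.
    """
    pixel_count = len(exposures[0][1])
    radiance = []
    for i in range(pixel_count):
        estimates = [values[i] / time
                     for time, values in exposures
                     if low <= values[i] <= high]   # skip too dark / saturated
        radiance.append(sum(estimates) / len(estimates) if estimates else None)
    return radiance

# Two pixels: a dim one and a bright one, shot at a long and a short exposure.
long_exposure = (1.0, [100, 255])        # bright pixel is saturated
short_exposure = (0.015625, [2, 200])    # dim pixel is nearly black
result = merge_exposures([long_exposure, short_exposure])
print(result)  # [100.0, 12800.0]
```

Neither photograph alone captures both pixels, but the merged result spans a brightness ratio of 128 to 1.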

The most practical application for the researchers' technique is in relighting movie scenes, said Sen. "Suppose you're making a new sci-fi movie and you wanted to have your hero actor in a spaceship." Nowadays the spaceship is likely to be a computer model rather than a big set, and convincingly putting the hero into the scene involves modifying the lighting of both the scene and the hero, he said.

"Any object inside a specific environment will assume some of the color of the environment," Sen said. The effect can be subtle, but the human eye is very good at noticing if something is missing. "For example, when an actor is inside a volcano, the actor will get a reddish tint or glow to their skin... in a rain forest there will be a greenish tone."

In addition, a computer-generated character will sometimes cast a shadow onto the real actor. "This lighting is often not available at the time they filmed the actor, so they have to relight the actor afterwards on the computer," said Sen.

The matrix of light properties captured by the researchers' technique contains all the information necessary to do high-resolution relighting and has the potential to do so much more rapidly than conventional techniques, said Sen. The researchers' current prototype works with static images, but could eventually be applied to moving pictures as well, he said.

The technique could eventually find use in medical imaging as well, said Sen. Some of today's medical imaging techniques are subject to the same reciprocal properties as dual photography, he said. "But we still need to look into this more seriously," he added.

The researchers are aiming to eventually be able to capture enough information to change the viewpoint of a scene after it has been filmed. This could potentially provide a new class of special effects for films and also a technique to make photorealistic virtual environments that can be used for training, said Sen. "While the obvious uses of these are for entertainment, they can be used to train personnel -- e.g. firefighters -- or to prototype products in manufacturing."

This requires measuring how a full light field is reflected by a scene over time. The challenge is being able to capture the vast amounts of information needed.

The dual-photography technique could be used to relight films in five years, said Sen. It will be 10 or 15 years before it is practical to work with the large data sets needed to use the technique to change the viewpoint of a movie scene after it has been filmed, he said.

Sen's research colleagues were Billy Chen, Gaurav Garg, Mark Horowitz, Marc Levoy, and Hendrik P.A. Lensch from Stanford University and Stephen R. Marschner from Cornell University. The researchers are scheduled to present the work at the Association for Computing Machinery (ACM) Special Interest Group Graphics (Siggraph) 2005 conference, held in Los Angeles July 31 to August 4. The research was funded by Nvidia Corporation, the National Science Foundation (NSF), the Defense Advanced Research Projects Agency (DARPA) and the Max Planck Institute in Germany.

Timeline: 5 years; 10-15 years

Funding: Government, Private

TRN Categories: Computer Vision and Image Processing; Data Representation and Simulation

Story Type: News

Related Elements: Technical paper, "Dual Photography," scheduled for presentation at the Association for Computing Machinery (ACM) Special Interest Group Graphics (Siggraph) 2005 conference, Los Angeles, July 31-August 4.

June 1/8, 2005

© Copyright Technology Research News, LLC 2000-2011. All rights reserved.